- Use nonlogged bulk copy whenever possible.

The nonlogged bulk copy is much faster than the logged one, but to use it you must provide all the following conditions:- The database option 'select into/bulkcopy' is set to true.

- The target table is not being replicated.

- The TABLOCK hint is specified.

- The target table has no indexes, or if the table has indexes, they are empty when the bulk copy starts.

- Use native mode bulk copy whenever possible.

This can improve performance in comparison with the character mode. - Try to use the BULK INSERT command instead of bcp or DTS to load data into SQL Server.

The BULK INSERT command is much faster than bcp or the data pump to perform text file import operations, however, the BULK INSERT statement cannot bulk copy data from SQL Server to a data file. - Use the bcp utility instead of DTS when you need to export data from the SQL Server table into a text file.

The bcp utility is much faster than DTS, so try to use it whenever possible. - Specify the number of the first and the last row to bulk copy, if you do not need to bulk copy all the rows from the specified data file.

This can result in good performance benefits, because the total amount of data copied will be less. - Specify the number of rows per batch of data copied, if the transaction log was filled before the bulk copy is complete.

Because each batch is copied to the server as one transaction, SQL Server commits or rolls back the transaction for every batch. When you bulk copy large data files, the transaction log can be filled before the bulk copy is complete. In this case, enlarge the transaction log, allow it to grow automatically or specify the number of rows per batch of data copied. - Try to increase the packet_size option.

The packet_size option specifies the number of bytes, per network packet, sent to and from the server. The packet_size can be from 4096 to 65535 bytes with the default of 4096. Increased packet size can enhance performance of bulk copy operations. Try to set the packet_size option to 8192 bytes and continue monitoring. - Use the ORDER hint, if the clustered index exists on the table and the data file is sorted according to the clustered index.

This can significantly improve performance of the bulk copy operation, because SQL Server will load data in the clustered index order without any reorder operations. - If you create a new table and bulk copy data into it, try to bulk load data first and only after that create any indexes.

This can significantly improve performance of the bulk copy operation, because data will be loaded into the SQL Server table without any index pages creation during the bulk copy. - If you load data into an empty table with the existing nonclustered indexes, try to drop the nonclustered indexes, bulk load data and only after that re-create the nonclustered indexes.

This can significantly improve performance of the bulk copy operation, because data will be loaded into the SQL Server table without any index pages creation during the bulk copy. - If you load data into a nonempty table with the existing clustered and/or nonclustered indexes, and the amount of data added is large, it can be faster to drop all indexes on the table, perform the bulk copy operation, and then re-create the indexes after the data is loaded.

Check the time needed to load data with dropping/re-creating indexes and without dropping/re-creating indexes on your test server before running the bulk copy operation on the production server. - If your SQL Server box has multiple CPUs, try to divide loaded data into two or more sources and run multiple instances of bcp on separate clients to load data in parallel.

Because SQL Server allows data to be bulk copied into a single table from multiple clients in parallel using the bcp utility or BULK INSERT statement, try to use parallel data loads whenever possible. To bulk copy data into SQL Server in parallel, you must provide all the following conditions:- The database option 'select into/bulkcopy' is set to true.

- The TABLOCK hint is specified.

- The target table does not have any indexes.

- Specify the TABLOCK hint, if you bulk copy data into an empty table from a single client.

This can improve performance of the bulk copy operation, because this causes a table-level lock to be taken for the duration of the bulk copy operation. - Try to avoid using CHECK_CONSTRAINTS and FIRE_TRIGGERS hints.

Using these hints can significantly degrade performance of the bulk copy operation, because for each row loaded the constraints and insert triggers defined on the destination table will be executed.

How To......?

It`s a sample page to try SQL in life

Sunday, 14 August 2016

SQL Server Bulk Copy Optimization Tips

Introduction to SQL Server Stretch Database

In many cases Azure SQL Database offers an economically and functionally viable alternative to SQL Server deployments. However, there are also scenarios where we might discover that rather than serving as a replacement, it provides synergy, working side by side with your on-premises databases. One of technologies that illustrate this paradigm is Stretch Database, introduced in SQL Server 2016. We will describe its basic characteristics and review its implementation steps in this article.

As the name indicates, the primary purpose of the Stretch Database is to implement a cross-premises database, with some of its content hosted in an on-premises instance of SQL Server 2016 and the reminder residing in an Azure SQL Database. Despite splitting data across two locations, you do not have to modify the way it is accessed, effectively eliminating the need to update existing database-dependent applications. At the same time, you can take advantage of easily scalable cloud compute and storage resources. As a side benefit, you can minimize duration of an on-premises maintenance window by reducing the amount of data to be backed up. Data migrated to Azure remains protected by automatic backups available as part of the standard Azure SQL Database offering. From the security standpoint, Stretch Database supports the range of features incorporated into SQL Server 2016 and Azure SQL Database V12, including Always Encrypted, Row Level Security, and Transparent Data Encryption.

Before you start planning implementation of Stretch Database you should first determine whether your data and its usage patterns comply with its requirements. There are a number of potentially blocking issues and limitations applicable to Stretch Database that you need to consider. Some of these limitations result from restrictions imposed by the capabilities of Azure SQL Database, such as lack of support for tables that contain FILESTREAM data; however there are number of others that are Stretch Database-specific. For example, there is currently no support for text, ntext, XML, or CLR user-defined data types, default and check constraints, or full-text and XML indexes. You should also note that You should also note that updating or deleting rows that have been either migrated to Azure SQL Database or designated as eligible for migration is not supported at this time. In addition, keep in mind that unique and primary keys are not enforced for data transferred to Azure. For a comprehensive list of the limitations of Stretch Database, refer to the Azure Documentation.

Once you have confirmed that your database and its tables do not violate any of the Stretch Database prerequisites, you need to apply the following configuration changes in order to migrate cold data to Azure:

- Enable the Stretch Database functionality on the SQL Server 2016 instance hosting the database you intend to stretch. This is accomplished by configuring the remote data archive server configuration option, which you can apply by running the following:

USE master EXEC sp_configure 'remote data archive', '1'; GO RECONFIGURE; GO

- Enable stretch for the database. This is accomplished by performing the following steps:

- Create a database master key, which will be used to secure credentials necessary to connect to the Azure SQL Database:

USE <database>; GO CREATE MASTER KEY ENCRYPTION BY PASSWORD='<password>'; GO

- Create the credentials to connect to the Azure SQL Database:

CREATE DATABASE SCOPED CREDENTIAL <credential_name> WITH IDENTITY = '<identity>' , SECRET = '<secret>' ; GO - Configure the database by enabling the remote data archive option. This requires providing the credentials you created in the previous step along with the name of the server hosting the Azure SQL Database to which cold data will be migrated.

CREATE DATABASE SCOPED CREDENTIAL <credential_name> WITH IDENTITY = '<identity>' , SECRET = '<secret>' ; GO ALTER DATABASE <database name> SET REMOTE_DATA_ARCHIVE = ON ( SERVER = '<server_name>' , CREDENTIAL = <credential_name> ) ; GO

- Create a database master key, which will be used to secure credentials necessary to connect to the Azure SQL Database:

- Select at least one table within the database to be included in the stretch. You can either migrate all of data contained with a table to Azure or create a filter function that limits the scope of migration to a subset of table rows. Likely candidates for stretch are large tables with a significant amount of historical data, such as history tables that accompany temporal tables introduced in SQL Server 2016. While you might have a good idea which tables to choose based on the knowledge of data usage patterns in your environment, you have the option of leveraging Stretch Database Advisor included in the SQL Server 2016 Upgrade Advisor (available from Microsoft Downloads), which will automatically identify the most optimal options for you. You can also use it to determine if any of them is a subject to the blocking issues we mentioned earlier. To enable stretch for an individual table, you can run the following:

ALTER TABLE <table_name> SET ( REMOTE_DATA_ARCHIVE = ON ( MIGRATION_STATE=OUTBOUND ));

The simplest way to implement these tasks relies on the Enable Database for Stretch Wizard. You can invoke it directly from the Object Explorer window within the SQL Server Management Studio by right-clicking on the database you intend to stretch and selecting Tasks->Stretch->Enable from the context sensitive menu. The wizard will automatically identify tables eligible for stretch and allow you to create a filter function to limit the scope of the data to be migrated. Next, it will prompt you to sign in to your Azure subscription and select the target Azure region. At that point, you will need to either provision a new server to host the Azure SQL database or designate an existing one. You will also be asked to provide a strong password to encrypt the database master key (DMK), which in turn is used to encrypt your SQL Server database credentials. In addition, the wizard will allow you to specify a range of IP addresses to be included in the server firewall rules, in order to permit connectivity from the on-premises SQL Server instance. Finally, on the summary page, you will find an estimated monthly cost resulting from the stretch.Pricing of Stretch Database is determined by three factors - compute, storage, and egress data transfers (out of Azure). The first of them is expressed in so called Database Stretch Units (DSUs) and ranges from 100 DSUs to 6000 DSUs. DSUs offer relative measurement of computing resources assigned to the Azure SQL Database, which translates into the speed of query processing and migration of cold data. The storage cost is calculated based on the price per GB per month, with database size and its backups contributing towards the total storage amount. The amount of storage is also affected by the resiliency setting you choose for the Azure SQL Database. In particular, selecting geo-redundant replica will incur extra cost when compared with local redundancy.

SQL Server auto update statistics VS auto update statistics asynchronously

How to find auto update statistics and auto update statistics asynchronously options in SSMS:

Right click on your database–> Go to Properties…> options

The query optimizer uses statistics to create query plans that improve query performance.

AUTO_UPDATE_STATISTICS Option

As from snapshot above, by default its value is true.

When the automatic update statistics option, AUTO_UPDATE_STATISTICS, is on, the query optimizer determines when statistics might be out-of-date and then updates them when they are used by a query. Statistics become out-of-date after insert, update, delete, or merge operations change the data distribution in the table or indexed view. The query optimizer determines when statistics might be out-of-date by counting the number of data modifications since the last statistics update and comparing the number of modifications to a threshold. The threshold is based on the number of rows in the table or indexed view.

The query optimizer checks for out-of-date statistics before compiling a query and before executing a cached query plan. Before compiling a query, the query optimizer uses the columns, tables, and indexed views in the query predicate to determine which statistics might be out-of-date. Before executing a cached query plan, the Database Engine verifies that the query plan references up-to-date statistics.

The AUTO_UPDATE_STATISTICS option applies to statistics objects created for indexes, single-columns in query predicates, and statistics created with the CREATE STATISTICS statement. This option also applies to filtered statistics.

Auto Update Statistics Asynchronously

The default setting for this option is disabled.

The asynchronous statistics update option, AUTO_UPDATE_STATISTICS_ASYNC, determines whether the query optimizer uses synchronous or asynchronous statistics updates. By default, the asynchronous statistics update option is off, and the query optimizer updates statistics synchronously. The AUTO_UPDATE_STATISTICS_ASYNC option applies to statistics objects created for indexes, single columns in query predicates, and statistics created with the CREATE STATISTICS statement.

Statistics updates can be either synchronous (the default) or asynchronous. With synchronous statistics updates, queries always compile and execute with up-to-date statistics; when statistics are out-of-date, the query optimizer waits for updated statistics before compiling and executing the query. With asynchronous statistics updates, queries compile with existing statistics even if the existing statistics are out-of-date; the query optimizer could choose a suboptimal query plan if statistics are out-of-date when the query compiles. Queries that compile after the asynchronous updates have completed will benefit from using the updated statistics.

Consider using synchronous statistics when you perform operations that change the distribution of data, such as truncating a table or performing a bulk update of a large percentage of the rows. If you do not update the statistics after completing the operation, using synchronous statistics will ensure statistics are up-to-date before executing queries on the changed data.

Consider using asynchronous statistics to achieve more predictable query response times for the following scenarios:

- Your application frequently executes the same query, similar queries, or similar cached query plans. Your query response times might be more predictable with asynchronous statistics updates than with synchronous statistics updates because the query optimizer can execute incoming queries without waiting for up-to-date statistics. This avoids delaying some queries and not others.

- Your application has experienced client request time outs caused by one or more queries waiting for updated statistics. In some cases, waiting for synchronous statistics could cause applications with aggressive time outs to fail.

How to Get Started with Big Data?

Big data is one of the most popular subject in recent time and everybody wants to get started on this subject. During recent interviews there are plenty of the questions with related to Big Data. Here is the most popular question which I receive on this subject.

Question: How to get started with Big Data?

Answer: Earlier last year I wrote timeless series on the subject Big Data. Here is the link to the entire series.

Big Data – Big Thing!

Big Data is becoming one of the most talked about technology trends nowadays. The real challenge with the big organization is to get maximum out of the data already available and predict what kind of data to collect in the future. How to take the existing data and make it meaningful that it provides us accurate insight in the past data is one of the key discussion points in many of the executive meetings in organizations. With the explosion of the data the challenge has gone to the next level and now a Big Data is becoming the reality in many organizations.

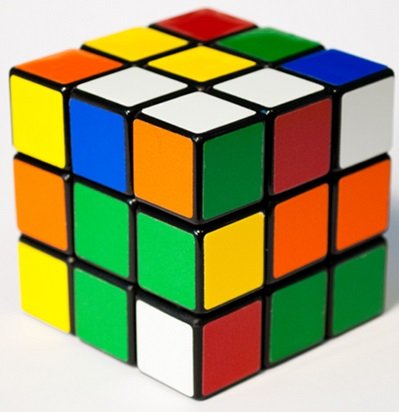

Big Data – A Rubik’s Cube

I like to compare big data with the Rubik’s cube. I believe they have many similarities. Just like a Rubik’s cube it has many different solutions. Let us visualize a Rubik’s cube solving challenge where there are many experts participating. If you take five Rubik’s cube and mix up the same way and give it to five different expert to solve it. It is quite possible that all the five people will solve the Rubik’s cube in fractions of the seconds but if you pay attention to the same closely, you will notice that even though the final outcome is the same, the route taken to solve the Rubik’s cube is not the same. Every expert will start at a different place and will try to resolve it with different methods. Some will solve one color first and others will solve another color first. Even though they follow the same kind of algorithm to solve the puzzle they will start and end at a different place and their moves will be different at many occasions. It is nearly impossible to have a exact same route taken by two experts.

I like to compare big data with the Rubik’s cube. I believe they have many similarities. Just like a Rubik’s cube it has many different solutions. Let us visualize a Rubik’s cube solving challenge where there are many experts participating. If you take five Rubik’s cube and mix up the same way and give it to five different expert to solve it. It is quite possible that all the five people will solve the Rubik’s cube in fractions of the seconds but if you pay attention to the same closely, you will notice that even though the final outcome is the same, the route taken to solve the Rubik’s cube is not the same. Every expert will start at a different place and will try to resolve it with different methods. Some will solve one color first and others will solve another color first. Even though they follow the same kind of algorithm to solve the puzzle they will start and end at a different place and their moves will be different at many occasions. It is nearly impossible to have a exact same route taken by two experts.Big Market and Multiple Solutions

Big Data is exactly like a Rubik’s cube – even though the goal of every organization and expert is same to get maximum out of the data, the route and the starting point are different for each organization and expert. As organizations are evaluating and architecting big data solutions they are also learning the ways and opportunities which are related to Big Data. There is not a single solution to big data as well there is not a single vendor which can claim to know all about Big Data. Honestly, Big Data is too big a concept and there are many players – different architectures, different vendors and different technology.

Evolution of Big Data

Data in Flat File

In earlier days data was stored in the flat file and there was no structure in the flat file. If any data has to be retrieved from the flat file it was a project by itself. There was no possibility of retrieving the data efficiently and data integrity has been just a term discussed without any modeling or structure around. Database residing in the flat file had more issues than we would like to discuss in today’s world. It was more like a nightmare when there was any data processing involved in the application. Though, applications developed at that time were also not that advanced the need of the data was always there and there was always need of proper data management.

In earlier days data was stored in the flat file and there was no structure in the flat file. If any data has to be retrieved from the flat file it was a project by itself. There was no possibility of retrieving the data efficiently and data integrity has been just a term discussed without any modeling or structure around. Database residing in the flat file had more issues than we would like to discuss in today’s world. It was more like a nightmare when there was any data processing involved in the application. Though, applications developed at that time were also not that advanced the need of the data was always there and there was always need of proper data management.Edgar F Codd and 12 Rules

Edgar Frank Codd was a British computer scientist who, while working for IBM, invented the relational model for database management, the theoretical basis for relational databases. He presented 12 rules for the Relational Database and suddenly the chaotic world of the database seems to see discipline in the rules. Relational Database was a promising land for all the unstructured database users. Relational Database brought into the relationship between data as well improved the performance of the data retrieval. Database world had immediately seen a major transformation and every single vendors and database users suddenly started to adopt the relational database models.

Edgar Frank Codd was a British computer scientist who, while working for IBM, invented the relational model for database management, the theoretical basis for relational databases. He presented 12 rules for the Relational Database and suddenly the chaotic world of the database seems to see discipline in the rules. Relational Database was a promising land for all the unstructured database users. Relational Database brought into the relationship between data as well improved the performance of the data retrieval. Database world had immediately seen a major transformation and every single vendors and database users suddenly started to adopt the relational database models.Relational Database Management Systems

Since Edgar F Codd proposed 12 rules for the RBDMS there were many different vendors who started them to build applications and tools to support the relationship between database. This was indeed a learning curve for many of the developer who had never worked before with the modeling of the database. However, as time passed by pretty much everybody accepted the relationship of the database and started to evolve product which performs its best with the boundaries of the RDBMS concepts. This was the best era for the databases and it gave the world extreme experts as well as some of the best products. The Entity Relationship model was also evolved at the same time. In software engineering, an Entity–relationship model (ER model) is a data model for describing a database in an abstract way.

Enormous Data Growth

Well, everything was going fine with the RDBMS in the database world. As there were no major challenges the adoption of the RDBMS applications and tools was pretty much universal. There was a race at times to make the developer’s life much easier with the RDBMS management tools. Due to the extreme popularity and easy to use system pretty much every data was stored in the RDBMS system. New age applications were built and social media took the world by the storm. Every organizations was feeling pressure to provide the best experience for their users based the data they had with them. While this was all going on at the same time data was growing pretty much every organization and application.

Well, everything was going fine with the RDBMS in the database world. As there were no major challenges the adoption of the RDBMS applications and tools was pretty much universal. There was a race at times to make the developer’s life much easier with the RDBMS management tools. Due to the extreme popularity and easy to use system pretty much every data was stored in the RDBMS system. New age applications were built and social media took the world by the storm. Every organizations was feeling pressure to provide the best experience for their users based the data they had with them. While this was all going on at the same time data was growing pretty much every organization and application.Data Warehousing

The enormous data growth now presented a big challenge for the organizations who wanted to build intelligent systems based on the data and provide near real time superior user experience to their customers. Various organizations immediately start building data warehousing solutions where the data was stored and processed. The trend of the business intelligence becomes the need of everyday. Data was received from the transaction system and overnight was processed to build intelligent reports from it. Though this is a great solution it has its own set of challenges. The relational database model and data warehousing concepts are all built with keeping traditional relational database modeling in the mind and it still has many challenges when unstructured data was present.

Interesting Challenge

Every organization had expertise to manage structured data but the world had already changed to unstructured data. There was intelligence in the videos, photos, SMS, text, social media messages and various other data sources. All of these needed to now bring to a single platform and build a uniform system which does what businesses need. The way we do business has also been changed. There was a time when user only got the features what technology supported, however, now users ask for the feature and technology is built to support the same. The need of the real time intelligence from the fast paced data flow is now becoming a necessity.

Every organization had expertise to manage structured data but the world had already changed to unstructured data. There was intelligence in the videos, photos, SMS, text, social media messages and various other data sources. All of these needed to now bring to a single platform and build a uniform system which does what businesses need. The way we do business has also been changed. There was a time when user only got the features what technology supported, however, now users ask for the feature and technology is built to support the same. The need of the real time intelligence from the fast paced data flow is now becoming a necessity.

Large amount (Volume) of difference (Variety) of high speed data (Velocity) is the properties of the data. The traditional database system has limits to resolve the challenges this new kind of the data presents. Hence the need of the Big Data Science. We need innovation in how we handle and manage data. We need creative ways to capture data and present to users.

Big Data is Reality!

How to Drop Clustered Index on Primary Key Column?

Question: How to drop clustered index, which is created on primary key?

Answer: If you thought the answer as simple as following script, you are wrong.

DROP INDEX PK_Table1_Col1

ON Table1

GO

When you run above script on Table1 where PK_Table1_Col1 is clustered index it will throw an error.

Msg 3723, Level 16, State 4, Line 26

An explicit DROP INDEX is not allowed on index ‘Table1.PK_Table1_Col1’. It is being used for PRIMARY KEY constraint enforcement.

An explicit DROP INDEX is not allowed on index ‘Table1.PK_Table1_Col1’. It is being used for PRIMARY KEY constraint enforcement.

Here are two blog posts which are related to the concept described in this blog post, I suggest you read them before you continue with this blog post as that will help you understand the subject of Primary Key and Clustered Index a bit more in detail.

So question still remains, How do we drop clustered index on primary key column?

The answer is very simple, but first we will go over the entire script which will demonstrate to us that we have created a clustered index and primary key on the table.

First, let us create a table.

1

2

3

4

5

6

7

8

| -- Create TableCREATE TABLE Table1(Col1 INT NOT NULL,Col2 VARCHAR(100)CONSTRAINT PK_Table1_Col1 PRIMARY KEY CLUSTERED (Col1 ASC))GO |

Next, check if table has a primary key and clustered index on the same column with the help of following a script.

1

2

3

4

5

6

| -- Check the Name of Primary KeySELECT nameFROM sys.key_constraints WHERE type = 'PK' AND OBJECT_NAME(parent_object_id) = N'Table1'GO |

1

2

3

4

5

6

7

| -- Check the Clustered Index SELECT OBJECT_NAME(object_id),nameFROM sys.indexes WHERE OBJECTPROPERTY(object_id, 'IsUserTable') = 1 AND type_desc='CLUSTERED' AND OBJECT_NAME(object_id) = N'Table1'GO |

Now let us attempt to drop a clustered index with drop script, which will give an error.

1

2

3

4

| -- Drop Clustered IndexDROP INDEX PK_Table1_Col1 ON Table1GO |

The script listed above will give us following error.

Msg 3723, Level 16, State 4, Line 26

An explicit DROP INDEX is not allowed on index ‘Table1.PK_Table1_Col1’. It is being used for PRIMARY KEY constraint enforcement.

An explicit DROP INDEX is not allowed on index ‘Table1.PK_Table1_Col1’. It is being used for PRIMARY KEY constraint enforcement.

Now it is clear that we are not able to drop the clustered index as there is a primary key. Now if you want to drop a clustered index, you will have to drop it with the help of following script where we drop constraint on the same column.

1

2

3

4

| -- Drop ConstraintALTER TABLE Table1DROP CONSTRAINT PK_Table1_Col1GONow let us run following script and double check that table does not have either primary key or clustered index.

|

Subscribe to:

Comments (Atom)